Enterprises are sitting on mountains of data, but most of it is gathering digital dust. The so-called “data lake” that was supposed to be a gold mine often turns out to be more like a swamp: murky, unstructured, and nearly impossible to navigate. At the same time, leaders keep hearing about generative AI, LLMs, and copilots that can transform industries, yet plugging them into messy data ecosystems feels like trying to bolt a rocket engine onto a rowboat.

This is where the Lakehouse + LLM shift comes in. Think of it as rebuilding your entire city’s infrastructure and then installing an AI mayor who knows every street, every building, and every resident in real time. Suddenly, your data is no longer a static archive. It’s alive, constantly generating insights, automating decisions, and predicting moves before you even ask the question.

The companies betting on this architecture are not just cleaning up data problems. They’re creating billion-dollar software products, faster decisions, and industries that don’t merely react. They anticipate. The question isn’t whether this future is coming. It’s whether your enterprise will be running it, or running to catch up.

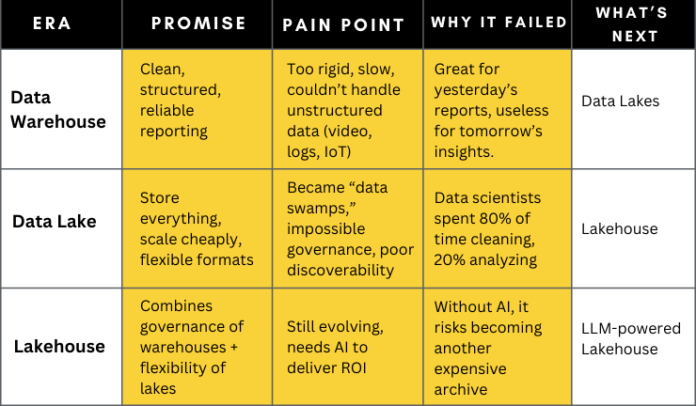

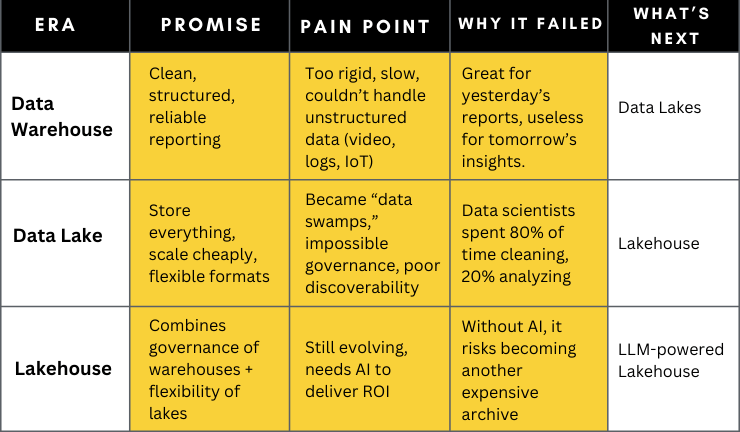

The Evolution: From Warehouses → Lakes → Lakehouses

Where LLMs Fit in Enterprise Data Strategy

Most enterprises have built data platforms that look impressive on a slide deck but crumble when asked a simple business question in real time. Executives want answers, not dashboards that take six weeks to configure. This is where large language models (LLMs) change the game.

LLMs act as the translator between raw data and human decision-making. Instead of SQL queries, pivot tables, and endless data prep, leaders can simply ask: “What were last quarter’s customer churn patterns, and what actions should we take to reduce them?” The model pulls from structured and unstructured sources inside the Lakehouse and serves up clear insights in plain English.
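As a rough illustration of that translation step, here is a minimal Python sketch. Everything in it is an assumption made for the example: `generate_sql` is a stand-in for a real model call and returns a canned query, the `customers` table is invented, and an in-memory SQLite database plays the role of the Lakehouse.

```python
import sqlite3

def generate_sql(question):
    """Stand-in for an LLM call (hypothetical); returns a canned query."""
    return (
        "SELECT region, AVG(churned) AS churn_rate "
        "FROM customers GROUP BY region ORDER BY region"
    )

def answer_question(conn, question, sql_generator=generate_sql):
    """Turn a question into SQL, allow only SELECT statements, and run it."""
    sql = sql_generator(question).strip()
    if not sql.lower().startswith("select"):
        raise ValueError("only read-only SELECT queries are allowed")
    return conn.execute(sql).fetchall()

def demo_connection():
    """Build a tiny in-memory table that stands in for Lakehouse data."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (region TEXT, churned INTEGER)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)",
        [("EU", 1), ("EU", 0), ("US", 0), ("US", 0)],
    )
    return conn
```

Calling `answer_question(demo_connection(), "What were churn patterns by region?")` returns one row per region with its churn rate. The read-only check is deliberately crude; it only shows where a guardrail would sit between the model and the data.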

The Big Players Driving This Shift:

- Databricks: Championing the Lakehouse vision with AI-native tooling baked into their platform.

- Snowflake: Evolving from data warehouse giant to an AI-ready cloud data powerhouse.

- AWS Lake Formation + Bedrock: Bringing Lakehouse governance with integrated access to generative AI models.

- Google BigQuery + Vertex AI: Marrying analytics muscle with advanced AI pipelines.

- Microsoft Fabric + Azure OpenAI: Building the bridge for enterprises already deep in the Microsoft ecosystem.

The Challenges Enterprises Must Solve Before Adoption

Generative AI in a Lakehouse isn’t magic. It’s power with pitfalls. Here are the four critical challenges every enterprise faces and the pragmatic solutions to overcome them.

A. Security and Compliance Nightmares

The Challenge: Enterprises hold sensitive data (financial records, patient data, intellectual property) that regulators guard fiercely. Feeding it into LLMs without safeguards risks lawsuits, fines, and brand damage.

The Solution: Keep AI inside the firewall. Deploy private LLMs fine-tuned on enterprise data, enforce strict role-based access, and build compliance frameworks (GDPR, HIPAA, PCI-DSS) directly into your Lakehouse pipelines. Security-first architectures don’t slow you down; they protect your license to operate.
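To make the role-based access idea concrete, here is a minimal Python sketch of redacting rows before they ever reach a model prompt. The roles, column names, and the `ROLE_POLICY` table are hypothetical; a real deployment would read the policy from the platform's governance catalog instead of hard-coding it.

```python
# Hypothetical role-to-column policy, hard-coded only for illustration.
ROLE_POLICY = {
    "analyst": {"customer_id", "region", "churned"},
    "finance": {"customer_id", "region", "churned", "annual_revenue"},
}

def redact_for_role(rows, role):
    """Return copies of rows containing only the columns the role may read."""
    allowed = ROLE_POLICY.get(role, set())  # unknown roles see nothing
    return [{k: v for k, v in row.items() if k in allowed} for row in rows]

def build_prompt(question, rows, role):
    """Assemble the model context from redacted rows only."""
    safe_rows = redact_for_role(rows, role)
    context = "\n".join(str(r) for r in safe_rows)
    return f"Context:\n{context}\n\nQuestion: {question}"
```

The design choice worth noting is that filtering happens before prompt assembly, so a column the caller is not entitled to never appears in text sent to the model.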

B. Trust and Hallucinations

The Challenge: LLMs are smart, but they also “hallucinate.” In business, a fabricated insight can mean bad strategy or regulatory exposure. Executives will not trust models that make things up.

The Solution: Introduce a validation layer. Every AI-generated output must be fact-checked against source data in the Lakehouse. Build human-in-the-loop approval for high-stakes outputs, and apply explainability tools so decision makers understand why a model made a call. Transparency builds trust.
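One simple form such a validation layer could take is sketched below in Python. It is an assumption-laden illustration, not a complete fact-checker: it only checks that every number quoted in an answer matches a value retrieved from source data, and the function names, tolerance, and status labels are invented for the example.

```python
import re

def extract_numbers(text):
    """Pull numeric literals (e.g. 12, 4.5) out of a model's answer."""
    return [float(n) for n in re.findall(r"-?\d+(?:\.\d+)?", text)]

def validate_answer(answer, source_values, tolerance=0.01, high_stakes=False):
    """Reject answers citing numbers that no source value supports."""
    unsupported = [
        n for n in extract_numbers(answer)
        if not any(abs(n - s) <= tolerance for s in source_values)
    ]
    if unsupported:
        return {"status": "rejected", "unsupported": unsupported}
    if high_stakes:
        # Supported by the data, but still routed to a person for sign-off.
        return {"status": "needs_human_review", "unsupported": []}
    return {"status": "approved", "unsupported": []}
```

An answer that passes the numeric check is approved automatically only when it is not flagged as high stakes; otherwise it waits for human approval, mirroring the human-in-the-loop step described above.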

C. Runaway Cloud Costs

The Challenge: Petabyte-scale data plus LLM queries equals cloud invoices that spiral out of control. CFOs lose patience fast when “AI innovation” shows up as a line item larger than revenue growth.

The Solution: Optimize before you query. Use tiered storage, caching, and pre-computed embeddings so you don’t hammer raw data every time. Set cost alerts and allocate AI budgets by business unit. Run ROI models side-by-side with AI pilots to prove financial value before scaling.
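Two of these controls, embedding caching and per-unit budget alerts, can be sketched in a few lines of Python. The class names, the `embed_fn` callable, and the 80% alert ratio are assumptions chosen for illustration; a production system would persist the cache and pull real billing data.

```python
import hashlib

class EmbeddingCache:
    """Cache embeddings by content hash so unchanged text is embedded once."""

    def __init__(self, embed_fn):
        self._embed_fn = embed_fn  # hypothetical callable: str -> list[float]
        self._store = {}
        self.misses = 0            # number of paid embedding calls made

    def get(self, text):
        key = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if key not in self._store:
            self._store[key] = self._embed_fn(text)
            self.misses += 1
        return self._store[key]

class BudgetTracker:
    """Track spend per business unit and signal when a threshold is crossed."""

    def __init__(self, limits, alert_ratio=0.8):
        self._limits = limits
        self._spent = {unit: 0.0 for unit in limits}
        self._alert_ratio = alert_ratio

    def record(self, unit, cost):
        """Add a cost; return True once spend reaches the alert threshold."""
        self._spent[unit] += cost
        return self._spent[unit] >= self._alert_ratio * self._limits[unit]
```

Here `misses` counts how many times the underlying embedding function was actually invoked, which makes the savings from caching easy to measure during a pilot.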

D. The Talent Gap

The Challenge: Most enterprises don’t have the skill mix to engineer Lakehouse + LLM ecosystems. Data engineers know lakes. ML engineers know models. Few know both. This slows adoption and increases risk.

The Solution: Build hybrid teams. Upskill internal talent through AI engineering bootcamps and partnerships. Where gaps remain, bring in fractional AI talent or specialized partners to accelerate builds. Think of it like renting rocket scientists until your own team can fly the shuttle.

Future Trends: Lakehouses + AI in 2025 and Beyond

From Predictive to Prescriptive

Data analytics has always asked, “What will happen?” Generative AI changes the question to, “What should we do about it?” Expect Lakehouse copilots that not only flag churn risks but auto-design retention campaigns, not only forecast demand but trigger supply chain adjustments in real time.

Industry-Specific Copilots

Horizontal AI is powerful, but the real value lies in specialization. We’ll see healthcare Lakehouses that speak HIPAA, finance copilots fluent in Basel III, and retail copilots that auto-generate promotions by the hour. Domain-trained LLMs are the future moat for enterprises.

Autonomous Data Pipelines

Manual ETL and endless cleansing cycles will fade. AI agents will monitor ingestion, detect anomalies, clean data on the fly, and document lineage without human intervention. Data pipelines will essentially manage themselves, freeing engineers to focus on innovation instead of firefighting.
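The anomaly-detection piece of that picture does not have to start with an AI agent. As a minimal sketch, assuming a pipeline that records the row count of each ingestion batch, a plain z-score check in Python can flag a batch that deviates sharply from recent history. The function name and the threshold of 3 standard deviations are illustrative choices, not a recommendation from the platforms named earlier.

```python
from statistics import mean, stdev

def is_anomalous(history, new_value, z_threshold=3.0):
    """Flag new_value if it is more than z_threshold std devs from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return new_value != mu  # flat history: any change is notable
    return abs(new_value - mu) / sigma > z_threshold
```

A check like this could gate a batch before it lands in curated tables, leaving cleaning and lineage documentation to later steps.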

Multi-Cloud and Hybrid by Default

Enterprises will reject lock-in. The future is data Lakehouses that span AWS, Azure, and GCP simultaneously, with AI orchestration ensuring workloads run where they’re fastest and cheapest. CIOs won’t choose one platform; they’ll orchestrate all of them.

AI Governance Becomes a Boardroom Agenda

Right now, AI governance is an IT afterthought. In 2025, it’s a boardroom mandate. Audit trails for every model decision, explainable dashboards for executives, and ethical oversight committees will be as common as financial audits.

From Data Swamps to Intelligent Ecosystems

A Lakehouse without AI is just another expensive archive. LLMs without governed data are toys that break in production. The future belongs to enterprises that combine both into a system built for speed, trust, and scale.

That’s where ISHIR comes in. Our Data & AI Accelerators help enterprises cut through the noise, engineer AI-powered Lakehouses, and deliver measurable business outcomes, not just proofs of concept. From strategy to implementation, we build the data backbone and AI copilots that turn raw data into competitive advantage.

If your enterprise is ready to move from dusty data lakes to intelligent, AI-native ecosystems, it’s time to stop talking about potential and start building it.

Ready to reimagine your enterprise data strategy?

Let’s engineer your AI-powered Lakehouse.