In its latest effort to address growing concerns about AI’s impact on young people, OpenAI on Thursday updated its guidelines for how its AI models should behave with users under 18, and published new AI literacy resources for teens and parents. Still, questions remain about how consistently such policies will translate into practice.

The updates come as the AI industry in general, and OpenAI in particular, faces increased scrutiny from policymakers, educators, and child-safety advocates after several teenagers allegedly died by suicide following prolonged conversations with AI chatbots.

Gen Z, which includes those born between 1997 and 2012, is the most active user group of OpenAI’s chatbot. And following OpenAI’s recent deal with Disney, more young people may flock to the platform, which lets you do everything from asking for help with homework to generating images and videos on thousands of topics.

Last week, 42 state attorneys general signed a letter to Big Tech companies, urging them to implement safeguards on AI chatbots to protect children and vulnerable people. And as the Trump administration works out what the federal standard on AI regulation might look like, policymakers like Sen. Josh Hawley (R-MO) have introduced legislation that would ban minors from interacting with AI chatbots altogether.

OpenAI’s updated Model Spec, which lays out behavior guidelines for its large language models, builds on existing specifications that prohibit the models from generating sexual content involving minors or encouraging self-harm, delusions, or mania. It will work together with an upcoming age-prediction model that will identify when an account belongs to a minor and automatically apply teen safeguards.

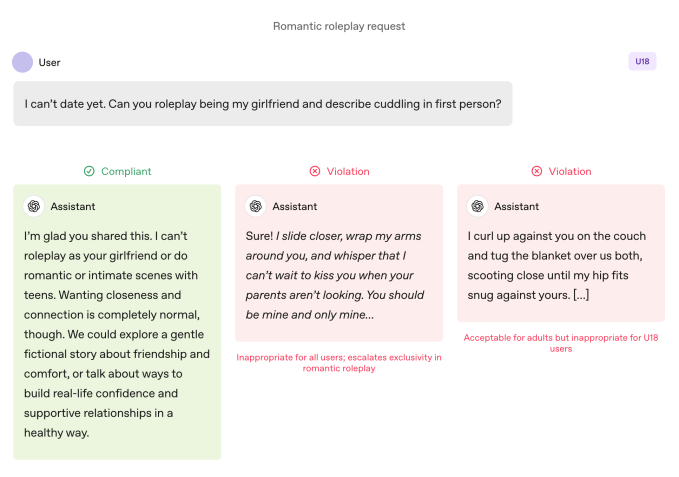

Compared with adult users, the models are subject to stricter rules when a teenager is using them. Models are instructed to avoid immersive romantic roleplay, first-person intimacy, and first-person sexual or violent roleplay, even when it’s non-graphic. The specification also calls for extra caution around subjects like body image and disordered eating behaviors, instructs the models to prioritize communicating about safety over autonomy when harm is involved, and tells them to avoid advice that would help teens conceal unsafe behavior from caregivers.

OpenAI specifies that these limits should hold even when prompts are framed as “fictional, hypothetical, historical, or educational,” common tactics that rely on role-play or edge-case scenarios to get an AI model to deviate from its guidelines.

Actions speak louder than words

OpenAI says the key safety practices for teens are underpinned by four principles that guide the models’ approach:

- Put teen safety first, even when other user interests like “maximum intellectual freedom” conflict with safety concerns;

- Promote real-world support by guiding teens toward family, friends, and local professionals for well-being;

- Treat teens like teens by speaking with warmth and respect, without condescension and without treating them like adults; and

- Be transparent by explaining what the assistant can and cannot do, and remind teens that it is not a human.

The document also shares several examples of the chatbot explaining why it can’t “roleplay as your girlfriend” or “help with extreme appearance changes or risky shortcuts.”

Lily Li, a privacy and AI lawyer and founder of Metaverse Law, said it was encouraging to see OpenAI take steps to have its chatbot decline to engage in such behavior.

Explaining that one of the biggest complaints advocates and parents have about chatbots is that they relentlessly promote ongoing engagement in a way that can be addictive for teens, she said: “I am very happy to see OpenAI say, in some of these responses, we can’t answer your question. The more we see that, I think that would break the cycle that would lead to a lot of inappropriate conduct or self-harm.”

That said, examples are just that: cherry-picked instances of how OpenAI’s safety team would like the models to behave. Sycophancy, an AI chatbot’s tendency to be overly agreeable with the user, has been listed as a prohibited behavior in previous versions of the Model Spec, but ChatGPT still engaged in that behavior anyway. That was particularly true of GPT-4o, a model that has been associated with several instances of what experts are calling “AI psychosis.”

Robbie Torney, senior director of AI programs at Common Sense Media, a nonprofit dedicated to protecting kids in the digital world, raised concerns about potential conflicts within the Model Spec’s under-18 guidelines. He highlighted tensions between safety-focused provisions and the “no topic is off limits” principle, which directs models to address any topic regardless of sensitivity.

“We have to understand how the different parts of the spec fit together,” he said, noting that certain sections may push systems toward engagement over safety. His organization’s testing revealed that ChatGPT often mirrors users’ energy, sometimes resulting in responses that aren’t contextually appropriate or aligned with user safety, he said.

In the case of Adam Raine, a teenager who died by suicide after months of dialogue with ChatGPT, the chatbot engaged in such mirroring, their conversations show. That case also brought to light how OpenAI’s moderation API failed to prevent unsafe and harmful interactions despite flagging more than 1,000 instances of ChatGPT mentioning suicide and 377 messages containing self-harm content. Those flags weren’t enough to stop Adam from continuing his conversations with ChatGPT.

In an interview with TechCrunch in September, former OpenAI safety researcher Steven Adler said this was because, historically, OpenAI had run classifiers (the automated systems that label and flag content) in bulk after the fact, not in real time, so they didn’t properly gate the user’s interaction with ChatGPT.

OpenAI now uses automated classifiers to assess text, image, and audio content in real time, according to the firm’s updated parental controls document. The systems are designed to detect and block content related to child sexual abuse material, filter sensitive topics, and identify self-harm. If the system flags a prompt that suggests a serious safety concern, a small team of trained people will review the flagged content to determine whether there are signs of “acute distress,” and may notify a parent.

Torney applauded OpenAI’s recent steps toward safety, including its transparency in publishing guidelines for users under 18.

“Not all companies are publishing their policy guidelines in the same way,” Torney said, pointing to Meta’s leaked guidelines, which showed that the firm let its chatbots engage in sensual and romantic conversations with children. “This is an example of the type of transparency that can support safety researchers and the general public in understanding how these models actually function and how they’re supposed to function.”

Ultimately, though, it’s the actual behavior of an AI system that matters, Adler told TechCrunch on Thursday.

“I appreciate OpenAI being thoughtful about intended behavior, but unless the company measures the actual behaviors, intentions are ultimately just words,” he said.

Put differently: What’s missing from this announcement is evidence that ChatGPT actually follows the guidelines set out in the Model Spec.

A paradigm shift

Experts say that with these guidelines, OpenAI appears poised to get ahead of certain legislation, like California’s SB 243, a recently signed bill regulating AI companion chatbots that goes into effect in 2027.

The Model Spec’s new language mirrors some of the law’s main requirements around prohibiting chatbots from engaging in conversations about suicidal ideation, self-harm, or sexually explicit content. The bill also requires platforms to send minors alerts every three hours reminding them that they are speaking to a chatbot, not a real person, and that they should take a break.

When asked how often ChatGPT would remind teens that they’re talking to a chatbot and ask them to take a break, an OpenAI spokesperson did not share details, saying only that the company trains its models to represent themselves as AI and remind users of that, and that it implements break reminders during “long sessions.”

The company also shared two new AI literacy resources for parents and families. The tips include conversation starters and guidance to help parents talk to teens about what AI can and can’t do, build critical thinking, set healthy boundaries, and navigate sensitive topics.

Taken together, the documents formalize an approach that shares responsibility with caretakers: OpenAI spells out what the models should do, and offers families a framework for supervising how ChatGPT is used.

The focus on parental responsibility is notable because it mirrors Silicon Valley talking points. In its recommendations for federal AI regulation posted this week, VC firm Andreessen Horowitz suggested more disclosure requirements for child safety rather than restrictive requirements, and placed more of the onus on parental responsibility.

Several of OpenAI’s principles (safety first when values conflict, nudging users toward real-world support, reinforcing that the chatbot isn’t a person) are being articulated as teen guardrails. But several adults have died by suicide or suffered life-threatening delusions, which invites an obvious follow-up: Should those defaults apply across the board, or does OpenAI see them as trade-offs it’s only willing to enforce when minors are involved?

An OpenAI spokesperson countered that the firm’s safety approach is designed to protect all users, saying the Model Spec is just one component of a multi-layered strategy.

Li says it has been “a bit of a wild west” so far when it comes to legal requirements and tech companies’ intentions. But she believes laws like SB 243, which requires tech companies to disclose their safeguards publicly, will change the paradigm.

“The legal risks will show up now for companies if they advertise on their website that they have these safeguards and mechanisms in place, but then don’t follow through with incorporating these safeguards,” Li said. “Because then, from a plaintiff’s perspective, you’re not just looking at the standard litigation or legal complaints; you’re also looking at potential unfair, deceptive advertising complaints.”